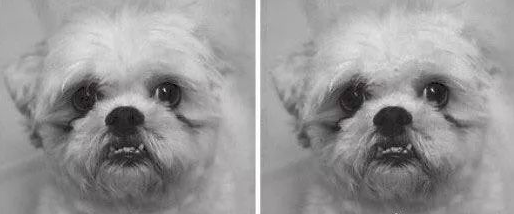

In the course of intelligent recognition, even the most advanced AI can be tripped up by defects in its own system. David Berreby, the scholar and author of the best-selling book Us and Them: The Science of Identity, has argued that artificial intelligence can be "deceived" because its built-in reasoning follows fixed, model-based patterns. From this perspective, as long as a sample satisfies the verification conditions of the established model, the system may read and accept it even if it does not actually meet the requirements. In other words, artificial intelligence also makes "errors" when it reasons, and minimizing them requires researchers to improve recognition systems on several fronts.

A team from American universities and social research institutions ran a very interesting experiment on these reasoning errors. The researchers first built a neural network model that could recognize the content of images according to certain criteria, then fed specially processed pictures into the model and had it identify them. As shown in the figure, to the human eye there is no visible difference between the two pictures. For the neural network, however, the result was surprising: it identified the image on the left as a "dog" and the image on the right as an "ostrich". In fact, the two images differ only by subtle pixel changes; the subject and the camera equipment are exactly the same. In this experiment, then, the pixel differences were the direct cause of the AI's wrong inference.
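The effect of "subtle pixel differences" can be illustrated with a toy model. The following is only a minimal sketch under stated assumptions: it uses a hypothetical linear scorer with hand-picked weights, not the neural network from the experiment, and the names `classify` and `perturb` are invented for illustration. It shows how a change of at most 0.02 per pixel (on a 0 to 1 scale), applied to every pixel in the direction that lowers the score, can add up to a different label.

```python
def classify(weights, pixels):
    """Toy linear classifier (an assumption, not the experiment's model)."""
    score = sum(w * p for w, p in zip(weights, pixels))
    return "dog" if score >= 0 else "ostrich"


def perturb(weights, pixels, epsilon):
    """Shift each pixel by at most epsilon against the sign of its weight."""
    shifted = []
    for w, p in zip(weights, pixels):
        step = -epsilon if w > 0 else epsilon
        # Keep the pixel inside the valid [0, 1] range.
        shifted.append(min(1.0, max(0.0, p + step)))
    return shifted


N = 1000  # number of pixels in the toy "image"
# Hand-picked weights and pixel values so the example is deterministic.
weights = [1.0 if i % 2 == 0 else -1.0 for i in range(N)]
original = [0.51 if i % 2 == 0 else 0.50 for i in range(N)]
altered = perturb(weights, original, epsilon=0.02)

print(classify(weights, original))  # dog
print(classify(weights, altered))   # ostrich
```

No single pixel changes by more than 0.02, yet because the small shifts all push the score the same way, their sum across 1000 pixels is enough to cross the decision boundary.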
Similarly, Jeff Clune, an assistant professor in the Department of Computer Science at the University of Wyoming, wrote an article on artificial intelligence together with two other researchers, Anh Nguyen and Jason Yosinski. In the article, titled "Artificial Intelligence Is Actually Easy to Deceive", Clune describes an image recognition system the three developed together. When the researchers gave the system a picture of wavy yellow and green stripes, it identified this "watercolor painting without a subject" as a "starfish" with 99.6% certainty. Faced with another picture full of messy, mixed spots, the system made another mistake: it inferred that the subject of the picture was a "leopard", with a confidence of nearly 100%.

Both the "puppy experiment" and the image recognition experiment led by Clune point to the same phenomenon: artificial intelligence is also disturbed by defects in its model when it analyzes and reasons. In Clune's experiment, for example, the recognition model must have defined its samples inadequately. Another artificial intelligence scholar, Solon Barocas of Princeton University, believes that Clune's model did not draw a boundary between "living" and "non-living" things, which left the AI unable to distinguish samples effectively. Barocas explained: "Take the image full of messy spots. If the Clune team's recognition model contains the rule 'many spots = leopard', then when people feed this picture into the model, the recognition system will detect a lot of spots and conclude that the picture shows a leopard." The logical reasoning of artificial intelligence, in other words, is highly formulaic.
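Barocas's hypothetical rule, "many spots = leopard", can be written down directly. The sketch below is a deliberately naive illustration and not the researchers' system: the grid representation, the `SPOT_THRESHOLD` value, and the function names are all assumptions made for this example.

```python
SPOT_THRESHOLD = 15  # hypothetical cutoff for "many spots"


def count_spots(image):
    """Count the cells marked 1 (a "spot") in a grid of 0s and 1s."""
    return sum(cell for row in image for cell in row)


def classify_by_spots(image):
    """Apply the single rule 'many spots = leopard' and nothing else."""
    if count_spots(image) >= SPOT_THRESHOLD:
        return "leopard"
    return "unknown"


# A 10x10 grid of scattered marks with no animal in it at all.
noise = [[1 if (7 * r + 3 * c) % 5 == 0 else 0 for c in range(10)]
         for r in range(10)]
blank = [[0] * 10 for _ in range(10)]

print(classify_by_spots(noise))  # leopard
print(classify_by_spots(blank))  # unknown
```

Because the rule checks only the number of spots, any sufficiently speckled image satisfies it, whether or not it depicts a living thing.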
Because of the limitations of the established model, even the most advanced AI system will make errors of judgment. In everyday life, the defects of this kind of reasoning mechanism can cause great inconvenience or create hidden dangers. In an intelligent anti-intrusion identification system, for example, malicious people can exploit loopholes in the identification model by disguising themselves with a device that fits the model, and so pass the inspection of the intelligent agent. How to use rules to close such loopholes has therefore become a topic that researchers need to focus on.

From Barocas's remarks we can already pick out some of the factors that lead to AI being deceived. According to him, the neural network built into the system plays a key role in judging and inferring unknown things. Once this network contains exploitable vulnerabilities, an "incorrect adaptive sample" that enters the inspection phase will be accepted and interpreted. Suppose, for example, that we set the following rules for a certain recognition network:

(1) Students wearing red clothes get 1 apple each
(2) Students wearing yellow clothes get 2 apples each
(3) Students wearing non-red clothes are not allocated apples

The third rule clearly corrupts the whole judging system. A student in yellow clothes gets 2 apples under the second rule, but is excluded from the distribution under the third. In a neural network, rules like these, with no weighting to rank them, are likely to be executed at the same time. If this model were used to allocate apples, some students in yellow would inevitably end up with 2 apples while other students in yellow got none, and the distribution of apples would descend into chaos.
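The conflict among the three apple rules can be made concrete. This is a minimal sketch that treats each rule as a condition plus an outcome; the dictionary layout and the helper names `fired_rules` and `has_conflict` are assumptions for illustration only.

```python
# Each rule: (label, condition on the student, apples allocated).
RULES = [
    ("rule 1", lambda s: s["color"] == "red", 1),
    ("rule 2", lambda s: s["color"] == "yellow", 2),
    ("rule 3", lambda s: s["color"] != "red", 0),
]


def fired_rules(student):
    """Return every rule whose condition the student satisfies."""
    return [(label, apples) for label, cond, apples in RULES if cond(student)]


def has_conflict(student):
    """True when the matching rules disagree on the number of apples."""
    outcomes = {apples for _, apples in fired_rules(student)}
    return len(outcomes) > 1


yellow = {"color": "yellow"}
red = {"color": "red"}

print(fired_rules(yellow))   # [('rule 2', 2), ('rule 3', 0)]
print(has_conflict(yellow))  # True
print(has_conflict(red))     # False
```

A student in yellow satisfies both the second and third rules, which prescribe 2 apples and 0 apples respectively, so the outcome depends on which rule happens to be applied.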
Mutually conflicting elements within a model therefore do it great harm. In this case the remedy is simple: add one more clause such as "If the rules conflict, the earlier rule shall prevail", and the problem is solved. The rules above have a further problem: they place no deeper restriction on the sample. If a doll in a red dress is mixed in among the recipients, for example, the AI system will issue an apple to the doll as well, in accordance with the established rules. To close this loophole, the designer needs to do the same as before and add a rule that limits the recipients to "human beings who can breathe".

So when artificial intelligence is deceived, the cause lies mostly in the defects of its built-in model. Because the sample rules are limited, artificial intelligence can be misled in the process of reasoning. Because the recognition system is modular, the AI judges a sample by looking for correspondences with preset points in the model. Under this condition, some samples that do not meet the requirements may still pass simply because they match the key points. To solve this problem, designers need to develop more, and more accurate, verification nodes, or verification rules that are "linear", "planar", or even "three-dimensional", and this requires researchers to carry out more in-depth research.
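Both fixes described above, a precedence clause for conflicting rules and a restriction on who counts as a recipient, can be sketched in a few lines. This is one possible reading, not a definitive design: it assumes precedence means "the first matching rule in the list wins", and the `is_human` field and the `allocate` function are hypothetical names introduced for this example.

```python
# Ordered rules: earlier entries take precedence over later ones (assumption).
RULES = [
    (lambda r: r["color"] == "red", 1),     # rule (1)
    (lambda r: r["color"] == "yellow", 2),  # rule (2)
    (lambda r: r["color"] != "red", 0),     # rule (3)
]


def allocate(recipient):
    """Return the number of apples for one recipient."""
    # Sample restriction: only living humans are eligible at all.
    if not recipient.get("is_human", False):
        return 0
    # Precedence: stop at the first rule that matches.
    for condition, apples in RULES:
        if condition(recipient):
            return apples
    return 0


print(allocate({"color": "yellow", "is_human": True}))  # 2
print(allocate({"color": "red", "is_human": False}))    # 0 (a doll)
print(allocate({"color": "blue", "is_human": True}))    # 0
```

With an explicit ordering, every student in yellow receives the same result, and the eligibility check runs before any clothing rule so that a red-dressed doll never reaches the allocation step.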
