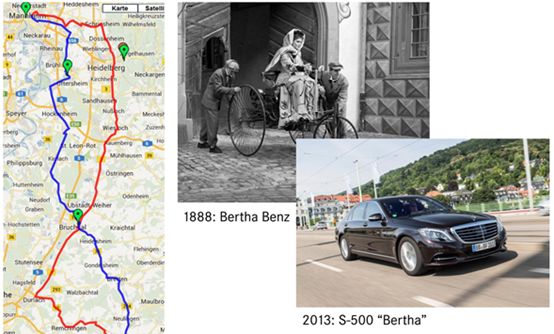

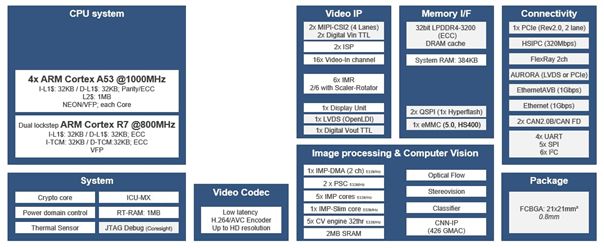

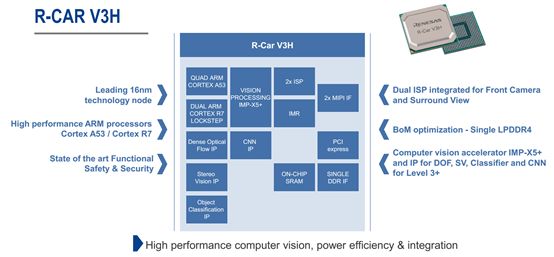

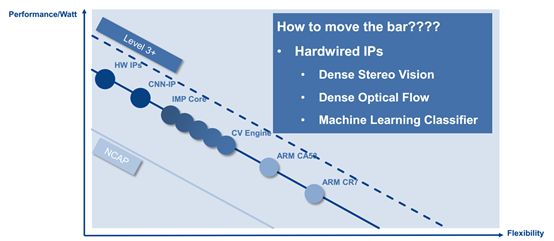

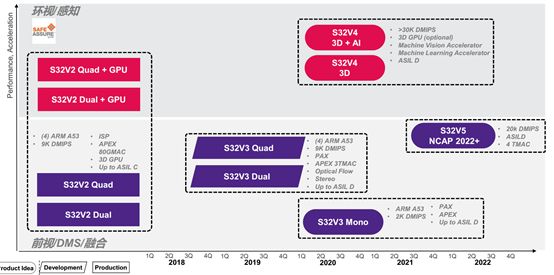

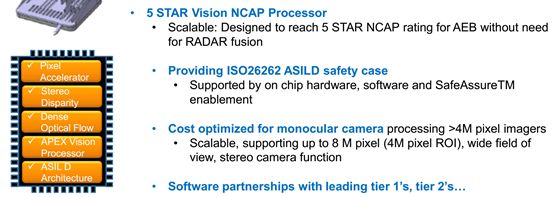

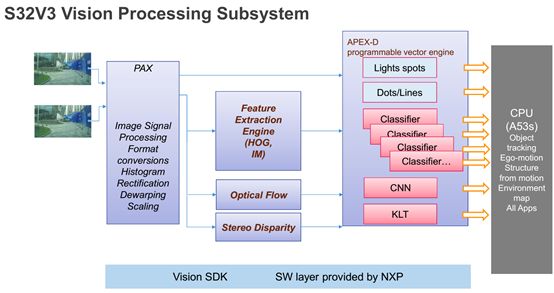

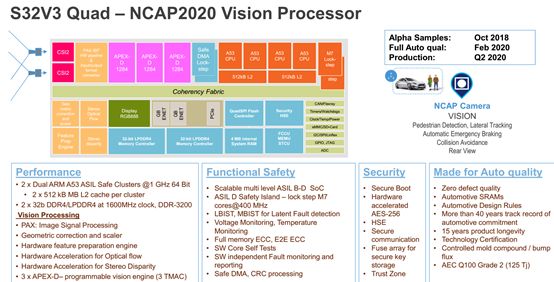

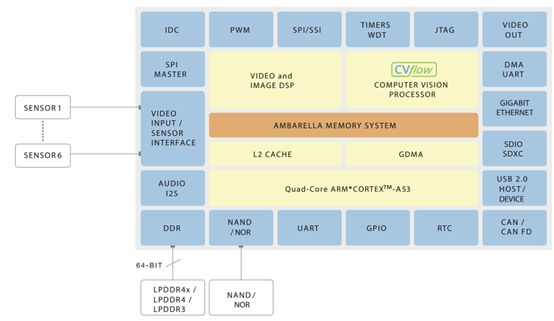

In August 1888, Bertha Benz, the wife of Mercedes-Benz founder Carl Benz, drove the three-wheeled internal-combustion vehicle invented by her husband from Mannheim, Germany, to Pforzheim. This journey of roughly 100 kilometers marks the birth of the modern automobile. 125 years later, in August 2013, Mercedes-Benz retraced the same route, this time with a driverless car. As a tribute to Bertha Benz and her steadfast support of her husband, Mercedes-Benz affectionately named the converted S500 "Bertha".

Bertha's core sensor is a binocular (stereo) camera with a resolution of 1024 x 440 pixels and a 45-degree FOV. Mercedes lengthened the binocular baseline to 35 centimeters, mainly to cover longer distances; the standard S-Class binocular camera has a 30-centimeter baseline. The binocular SGM (semi-global matching) and Stixel ("stick-like pixel") computations all run on FPGAs, and each image frame yields 400,000 independent depth measurements. For the rest of the perception work, Mercedes uses one 90-degree-FOV camera to recognize traffic lights and another 90-degree-FOV camera as a feature-based localization aid. There are also four 120-degree medium-range millimeter-wave radars. KIT provided the high-precision map, which includes speed limits, zebra crossings, stop lines, and road curvature. Because the route runs through suburban areas, Mercedes-Benz relied on GPS alone and did not use an external inertial navigation system. This is the L4 driverless car closest to mass production.

Binocular vision has an overwhelming advantage over monocular vision: anything a monocular camera can do, a binocular camera can do as well, but a monocular camera cannot do binocular 3D stereo vision. Take traffic light detection as an example. Most readers will have seen the video of a Mobileye car running a red light. Recognizing traffic lights is one of the hardest technical problems in perception. Both Baidu and Google draw on their own Street View image libraries as prior information, setting a good ROI (region of interest) to improve the accuracy of traffic light recognition.
Street View imagery, however, is updated very slowly. That may be acceptable in the United States, but it is a poor fit for fast-developing China. Even with Street View, a Waymo car has been caught on video running a red light. Without Street View, the recognition rate of purely monocular traffic light detection is quite low. Worse still, China's traffic lights come in all sorts of odd forms. Tianjin in particular has five traffic lights on the road, which is simply a nightmare for driverless vehicles. V2X is still far off.

At present there are three methods for recognizing traffic lights with a binocular camera. The first is target candidate region filtering: analyze the disparity values inside each candidate region and separate the foreground from the background. This needs no prior knowledge.

The second is filtering by associated position. This method does require prior knowledge, namely statistics on the 3D position of traffic lights: above all their height, but also their straight-line distance from the zebra crossing and from the road edge. When the driverless vehicle is running online, it obtains the 3D distance information of each target and filters it against this prior knowledge base. This can greatly improve the traffic light recognition rate. Sometimes the height of the traffic light alone is enough. Compared with Street View, this kind of prior knowledge is easy to obtain.

The third is the re-projection method: build a model of real-world traffic lights, add the depth measurements, re-project the candidate onto the traffic light model, and then use the depth data to decide whether the candidate really is a traffic light.

Whichever method is used, the result is bound to be much better than with a monocular camera. Binocular stereo matching is almost always done on an FPGA.
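The second method, filtering by position priors, can be sketched in a few lines. This is only an illustrative sketch, not the implementation used by any of the companies mentioned: the `Camera` and `Candidate` types, the 2 to 8 meter height window, the 120-meter range cutoff, and all camera parameters are hypothetical values chosen for the example, and the camera is assumed to be level and forward-facing (no pitch or roll).

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Camera:
    focal_px: float        # focal length in pixels (assumed value)
    baseline_m: float      # stereo baseline in meters
    cx: float              # principal point, x (pixels)
    cy: float              # principal point, y (pixels)
    mount_height_m: float  # camera height above the road (assumed value)


@dataclass
class Candidate:
    u: float               # image column of the candidate light
    v: float               # image row of the candidate light
    disparity_px: float    # stereo disparity measured at the candidate


def to_3d(cam: Camera, c: Candidate) -> Tuple[float, float, float]:
    """Back-project a candidate to (lateral offset, height above road, depth)."""
    z = cam.focal_px * cam.baseline_m / c.disparity_px   # depth from disparity
    x = (c.u - cam.cx) * z / cam.focal_px                # lateral offset
    y_down = (c.v - cam.cy) * z / cam.focal_px           # image y points down
    height = cam.mount_height_m - y_down                 # height above the road
    return x, height, z


def filter_by_height(cam: Camera, candidates: List[Candidate],
                     min_h: float = 2.0, max_h: float = 8.0,
                     max_range_m: float = 120.0) -> List[Candidate]:
    """Keep candidates whose 3D height matches the (hypothetical) prior."""
    kept = []
    for c in candidates:
        if c.disparity_px <= 0:      # no valid depth measurement
            continue
        _, height, z = to_3d(cam, c)
        if z <= max_range_m and min_h <= height <= max_h:
            kept.append(c)
    return kept


if __name__ == "__main__":
    cam = Camera(focal_px=1236.0, baseline_m=0.35, cx=512.0, cy=220.0,
                 mount_height_m=1.3)
    overhead_light = Candidate(u=600.0, v=100.0, disparity_px=8.652)
    tail_lamp = Candidate(u=500.0, v=230.0, disparity_px=21.63)
    for c in filter_by_height(cam, [overhead_light, tail_lamp]):
        print("kept candidate at", to_3d(cam, c))
```

In this example the red tail lamp of a car 20 meters ahead sits about 1.1 meters above the road and is rejected, while the candidate about 6 meters above the road at 50 meters is kept. A monocular detector has no depth measurement with which to make this distinction.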
Engineers who understand both the algorithms and FPGAs are very rare. FPGAs are also expensive, which limits the adoption of binocular systems. All FPGA manufacturers are located in the United States, which worries Chinese companies. For now the United States turns a blind eye to FPGA sales, but if it ever enforces restrictions strictly, it may become impossible to buy an FPGA with more than 100,000 computing units. Monocular systems, by contrast, have almost no special hardware requirements and are inexpensive, so they are far more widely used than binocular ones.

This situation will change dramatically in the next few years. Two of the world's top three automotive processor manufacturers have introduced processors designed specifically for binocular vision: the Renesas R-CAR V3H and the NXP S32V3 series. Both will have samples in the third quarter of 2018. Binocular systems will no longer need an FPGA.

First, the Renesas R-CAR V3H. It is now confirmed that R-CAR V3H has won orders from Nissan and Toyota. Nissan will use R-CAR V3H across the board, including highway autopilot, remote parking, automatic parking, traffic jam assist, and unrestricted AEB (AEB at the L2 stage has many restrictions). R-CAR V3H's computing power reaches 4.2 TFLOPS, exceeding the 3 TFLOPS of Mobileye's EyeQ4. R-CAR V3H also has a clear advantage in manufacturing process: it is built on TSMC's 16 nm FinFET process, which surpasses the ST 28 nm FD-SOI process used for the EyeQ4. Of course, the Mobileye EyeQ4 is two years ahead of the R-CAR V3H. But the R-CAR V3H also has a powerful CPU system with four Cortex-A53 cores and one Cortex-R7 core with lockstep, which means it should be able to meet ISO 26262 ASIL-A or ASIL-B, whereas the Mobileye chip can only pass AEC-Q100 Grade 1.

The picture above shows the internal architecture of R-CAR V3H. R-CAR V3H targets L3+ vehicles and includes hard IP cores for stereo disparity and optical flow. Their efficiency is comparable to that of an FPGA, and may be slightly better.
NXP plans to release S32V3 samples in the third quarter of 2018. Like the V3H, it includes hard IP for stereo disparity and optical flow. The S32V3 reaches ASIL-D, a safety level far beyond Mobileye's.

Figure: S32V3 internal block diagram.

Besides the two top-tier companies NXP and Renesas, there is also a product from dashcam chip giant Ambarella whose computing performance is even stronger than Mobileye's; it too is due in the third quarter of 2018. Ambarella acquired VisLab, an Italian start-up, for US$30 million in 2015. The company was founded by a team from the University of Parma in Italy, and its founder, Professor Alberto Broggi, now heads Ambarella's automated driving business.

Ambarella's first-generation automated driving chip, the CV1, can support two 8-megapixel binocular stereo vision inputs and has a computing power of 2 TFLOPS. With Sony's IMX317 image sensor, the resolution reaches 3840 x 1728. At such a high resolution, the effective range can reach 300 meters even with a 75-degree FOV and a 30-centimeter baseline, far more than the effective range of most lidars. A lidar's effective range depends strongly on the reflectance of the target. Manufacturers usually quote only the effective range at 80% reflectance. For white vehicles, the reflectance may be only 10%, and the effective range shrinks to one third of the 80% figure or even less. Typical MEMS lidars have an effective range of only 30 to 70 meters at 10% reflectance; mechanical rotating lidars are slightly better.

The CV1 can directly output a disparity map at a frame rate of one frame per second. It also provides roadblock or barrier detection, road edge and lane detection, traffic signal detection, and general obstacle detection. The CV1 was only Ambarella's way of testing the waters. Ambarella launched the CV2AQ in early 2018, with computing performance increased roughly 10 times, to approximately 14 TFLOPS.
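The two range figures above can be sanity-checked with a back-of-the-envelope sketch. It rests on simplifying assumptions that are not from the article: an ideal pinhole camera whose 75-degree FOV spans the full 3840-pixel image width, and a simple lidar model in which the return from a diffuse target scales with reflectance divided by range squared, so that detection range scales with the square root of reflectance. All function names are hypothetical.

```python
import math


def focal_length_px(image_width_px: float, hfov_deg: float) -> float:
    """Pinhole focal length in pixels from image width and horizontal FOV."""
    return (image_width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)


def disparity_px(depth_m: float, baseline_m: float, focal_px: float) -> float:
    """Stereo disparity d = f * B / Z for a rectified camera pair."""
    return focal_px * baseline_m / depth_m


def depth_step_m(depth_m: float, baseline_m: float, focal_px: float,
                 disparity_step_px: float = 1.0) -> float:
    """First-order depth change caused by a given disparity change:
    dZ ~= Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_step_px


def lidar_range_scale(reflectance: float,
                      rated_reflectance: float = 0.80) -> float:
    """Range scale factor under the assumed rho / R^2 return model."""
    return math.sqrt(reflectance / rated_reflectance)


if __name__ == "__main__":
    f = focal_length_px(3840, 75.0)   # sensor width and FOV quoted in the text
    b = 0.30                          # 30-centimeter baseline quoted in the text
    print(f"focal length: {f:.0f} px")
    print(f"disparity at 300 m: {disparity_px(300.0, b, f):.2f} px")
    print(f"depth step per 0.25 px at 300 m: "
          f"{depth_step_m(300.0, b, f, 0.25):.1f} m")
    print(f"lidar range scale at 10% reflectance: "
          f"{lidar_range_scale(0.10):.2f}")
```

Under these assumptions the disparity at 300 meters is about 2.5 pixels, so that range depends on reliable sub-pixel matching. The lidar scale factor for 10% versus 80% reflectance comes out at about 0.35, consistent with the "one third" figure.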
The CV2AQ is manufactured on Samsung's 10-nanometer process and has passed AEC-Q100 Grade 2. The chip is extremely powerful: it can process 32 megapixels of data simultaneously and can support six binocular cameras at once, namely two 8-megapixel binocular cameras and four 2-megapixel binocular cameras. The CV1, by comparison, can handle only a single 8-megapixel camera.

With the FPGA bottleneck broken, large-scale adoption of binocular vision is on its way!